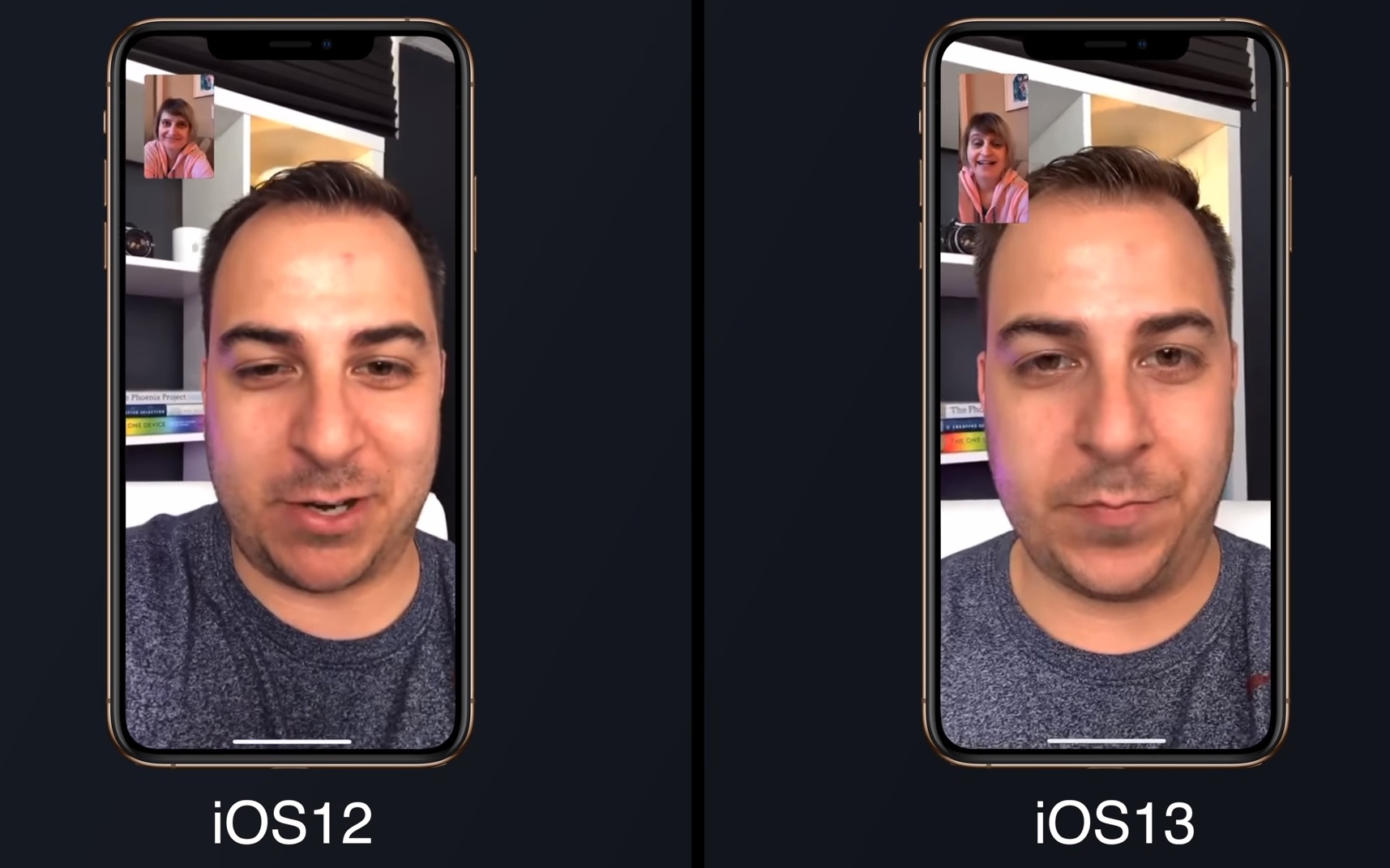

Apple has unveiled the latest iteration of its mobile operating system, iOS 13. With the iOS 13 update, Apple has tried to address some major issues from iOS 12, including one that has long bothered FaceTime users.

FaceTime is Apple’s official video chat app, offering secure video calls between Apple devices. However, calls can feel a little impersonal because most of us don’t make eye contact with the person we are talking to.

When we talk to someone, we mostly look at the screen rather than at the front-facing camera, which breaks eye contact with the person on the other side.

Strictly speaking, this is not a flaw: manufacturers have no choice but to place the front-facing camera above the screen. Apple, however, has come up with a fix that aims to make FaceTime calls feel more authentic and natural.
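To put a rough number on the problem, the sketch below computes the angle between looking straight into the camera and looking at a point on the screen below it. The 5 cm offset and 30 cm viewing distance are hypothetical values chosen for illustration, not measurements of any particular iPhone.

```python
import math

def gaze_offset_degrees(camera_offset_cm, viewing_distance_cm):
    """Angle between looking at the camera and looking at a point
    `camera_offset_cm` below it, seen from `viewing_distance_cm` away."""
    return math.degrees(math.atan2(camera_offset_cm, viewing_distance_cm))

# Hypothetical numbers: the other person's eyes appear about 5 cm below
# the front camera, and the phone is held about 30 cm from the face.
print(round(gaze_offset_degrees(5, 30), 1))  # prints 9.5
```

With these assumed numbers the gaze misses the camera by roughly 9.5 degrees, which is why the person on the other end can appear to be looking slightly down.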

FaceTime Attention Correction

Apple introduced FaceTime Attention Correction in iOS 13 beta 3. It was discovered by Mike Rundle, who shared a screenshot of the toggle alongside an explanation:

Haven’t tested this yet, but if Apple uses some dark magic to move my gaze to seem like I’m staring at the camera and not at the screen I will be flabbergasted. (New in beta 3!) pic.twitter.com/jzavLl1zts

— Mike Rundle (@flyosity) July 2, 2019

FaceTime Attention Correction makes users appear to be making eye contact, even though in reality they are most likely looking at the screen.

Guys – "FaceTime Attention Correction" in iOS 13 beta 3 is wild.

Here are some comparison photos featuring @flyosity: https://t.co/HxHhVONsi1 pic.twitter.com/jKK41L5ucI

— Will Sigmon (@WSig) July 2, 2019

How does Apple manage to do this?

Twitter user Dave Schukin put together a video explaining how FaceTime Attention Correction works.

According to Dave, FaceTime Attention Correction uses ARKit to grab a depth map and the position of the face, and then adjusts the eyes accordingly.

How iOS 13 FaceTime Attention Correction works: it simply uses ARKit to grab a depth map/position of your face, and adjusts the eyes accordingly.

Notice the warping of the line across both the eyes and nose. pic.twitter.com/U7PMa4oNGN

— Dave Schukin (@schukin) July 3, 2019

When he passes one arm of his glasses across his face, the straight line visibly warps where it crosses his eyes and nose. It looks like Apple is using some AR magic to make it appear as if the user is gazing into the camera.
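Apple has not documented how the feature works internally, so the snippet below is only a toy illustration, not Apple's algorithm. It assumes the eye positions are already known (the kind of information a face-tracking depth map could supply) and nudges the pixels around each eye upward, with the shift fading to zero at the edge of a small radius. A straight horizontal line running through the eyes, like the arm of Schukin's glasses, then bends where it crosses them while staying straight elsewhere. The function names, eye coordinates, radius, and strength are all hypothetical.

```python
import math

def bump(x, y, cx, cy, radius, strength):
    """Vertical shift in pixels: largest at the eye centre (cx, cy), zero at `radius`."""
    d = math.hypot(x - cx, y - cy)
    if d >= radius:
        return 0.0
    t = 1.0 - d / radius  # 1 at the centre, 0 at the edge
    return strength * t * t  # smooth falloff

def warp_eyes_up(image, eyes, radius, strength):
    """Toy gaze warp (hypothetical, not Apple's method).

    Each output pixel copies the source pixel `shift` rows below it,
    so content near each eye centre appears to move up."""
    h, w = len(image), len(image[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            shift = sum(bump(x, y, cx, cy, radius, strength) for cx, cy in eyes)
            sy = min(h - 1, max(0, round(y + shift)))  # clamp to the image
            row.append(image[sy][x])
        out.append(row)
    return out

def line_rows(image, x):
    """Rows in column x that contain the line (pixel value 1)."""
    return [y for y in range(len(image)) if image[y][x] == 1]

# A 15x9 "face" with a straight horizontal line (a glasses arm) on row 4,
# and two hypothetical eye centres sitting on that line.
W, H = 15, 9
face = [[1 if y == 4 else 0 for _ in range(W)] for y in range(H)]
eyes = [(4, 4), (10, 4)]
warped = warp_eyes_up(face, eyes, radius=3, strength=2)

for x in (0, 4, 7, 10, 14):
    print(x, line_rows(warped, x))
```

Printing the line's row for a few columns shows it staying on row 4 away from the eyes and lifting to row 3 at each eye centre, a miniature version of the warping visible in the video.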

Currently, this feature is only available in iOS 13 beta 3, so everyone else will have to wait for the public release.