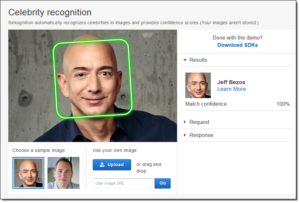

Rekognition, Amazon’s ‘racist’ and ‘sexist’ face recognition technology, is back in the news. In July 2018, researchers pointed out that the technology had trouble identifying people with darker skin tones. New research now suggests that Rekognition might also be sexist.

Rekognition Has a Bias Against Women: Research

Research from the MIT Media Lab shows that Rekognition has trouble determining the gender of women. When the system analyzed images of women, it had an error rate of 19%. In other words, 19% of the time, it labeled women as men.

The error rate climbs even higher for women with darker skin tones. As a result, Rekognition has been branded both sexist and racist. Matt Wood, GM of AI at AWS, responded by saying that Amazon is working to improve the product.
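To make those percentages concrete: an error rate here is simply the share of images in a group whose predicted gender does not match the true label, and audits of this kind report it separately for each subgroup so that gaps become visible. The short Python sketch below illustrates that arithmetic. The function name `error_rates_by_group` and the toy data are hypothetical and do not reproduce the MIT study's method or numbers.

```python
from collections import defaultdict


def error_rates_by_group(records):
    """Return the misclassification rate for each subgroup.

    records: iterable of (group, true_label, predicted_label) tuples.
    """
    totals = defaultdict(int)  # images seen per group
    errors = defaultdict(int)  # wrong predictions per group
    for group, truth, predicted in records:
        totals[group] += 1
        if predicted != truth:
            errors[group] += 1
    # Error rate = wrong predictions / total images, computed per group.
    return {group: errors[group] / totals[group] for group in totals}


# Hypothetical toy data, NOT the study's results.
sample = [
    ("lighter-skinned women", "female", "female"),
    ("lighter-skinned women", "female", "female"),
    ("lighter-skinned women", "female", "female"),
    ("lighter-skinned women", "female", "male"),
    ("darker-skinned women", "female", "male"),
    ("darker-skinned women", "female", "female"),
]

print(error_rates_by_group(sample))
# {'lighter-skinned women': 0.25, 'darker-skinned women': 0.5}
```

Reporting one overall figure for all women (here 2 errors out of 6 images) would hide the fact that one subgroup is misclassified twice as often as the other, which is why such audits break results down by skin tone.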

Has IBM Solved the Bias Problem?

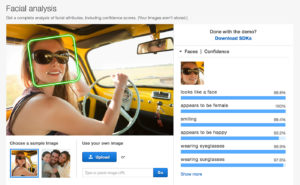

While Amazon finds itself in controversy, another industry giant seems to have found a way to address the problem. IBM has announced a new dataset of faces that appears to be relatively free of the biases seen in Rekognition. IBM drew on publicly available images of human faces from Flickr’s Creative Commons section. IBM’s dataset isn’t available for public use as of now.

IBM’s approach distinguishes faces using measurements such as head length and nose length, along with other factors including facial symmetry, age, and gender. Amazon’s racist and sexist software might just learn a few things from IBM. The AI wars are getting interesting.

Source: The Register