SING (Symbol-to-Instrument Neural Generator) is a neural audio synthesiser that generates musical notes from a specified pitch, instrument and velocity (the force with which the note is played), among other inputs. Human evaluators were asked to judge whether the notes it creates sound natural, in other words, whether they resemble notes played on a guitar, flute or other instrument.

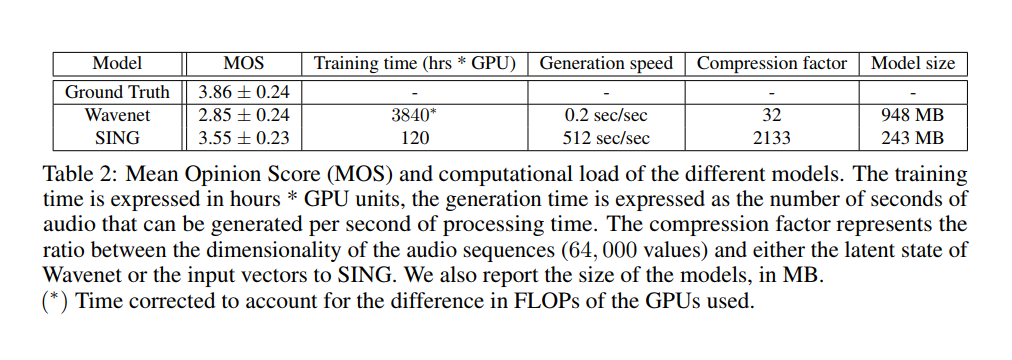

In most cases, the evaluators found the notes from SING more realistic-sounding than those from other artificial intelligence networks, even though the system needs only a fraction of the time for training and audio generation. For every second of computation, SING can produce around 512 seconds of audio.
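To put the throughput figure in perspective, here is a minimal Python sketch of the arithmetic. Only the 512-to-1 ratio comes from the text; the 16 kHz sample rate and the function names are assumptions made purely for illustration.

```python
# Throughput quoted in the text: seconds of audio produced per second of computation.
AUDIO_SECONDS_PER_COMPUTE_SECOND = 512

# Assumption for illustration only: the text does not state a sample rate.
ASSUMED_SAMPLE_RATE_HZ = 16_000


def compute_seconds_needed(audio_seconds: float) -> float:
    """Computation time needed to generate a clip of the given length."""
    return audio_seconds / AUDIO_SECONDS_PER_COMPUTE_SECOND


def samples_per_compute_second(sample_rate_hz: int = ASSUMED_SAMPLE_RATE_HZ) -> int:
    """Audio samples produced per second of computation at the given sample rate."""
    return AUDIO_SECONDS_PER_COMPUTE_SECOND * sample_rate_hz


# A 4-second note would need 4 / 512 seconds of computation.
print(compute_seconds_needed(4.0))
# Samples per second of computation under the assumed 16 kHz rate.
print(samples_per_compute_second())
```

At that ratio, a four-second note takes well under a hundredth of a second to compute, which is what makes real-time use plausible.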

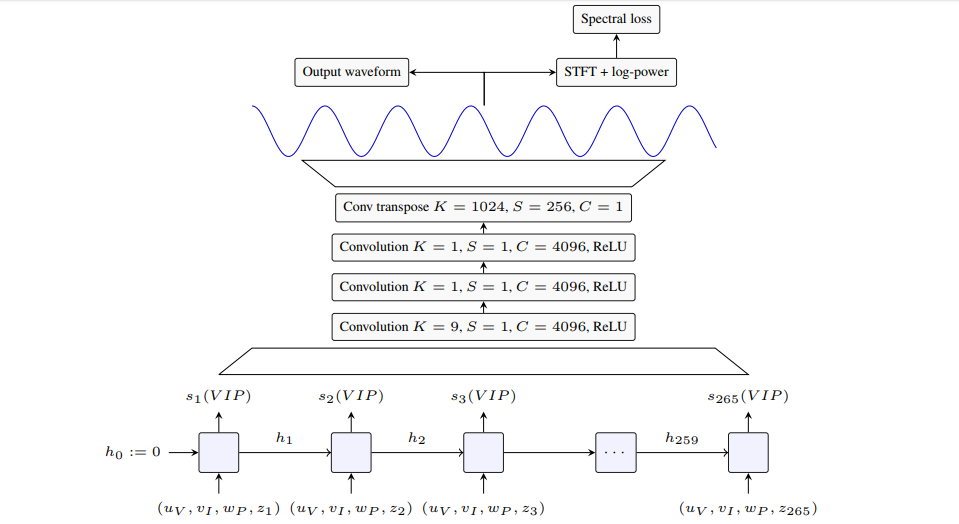

Existing AI-based audio generation systems create audio one sample at a time. SING uses the same kind of training data, namely data sets of existing recordings of musical notes, but it produces audio in much larger batches, generating the waveform for 1,024 audio samples in a single go. This end-to-end process significantly reduces the computational power needed.
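The saving from generating many samples per step can be illustrated by simply counting steps. The sketch below is not SING's actual implementation: it assumes non-overlapping blocks of 1,024 samples, a 16 kHz sample rate and a four-second note, none of which are stated in the text beyond the 1,024 figure.

```python
import math

FRAME_SIZE = 1024  # samples generated per step, as quoted in the text

# Assumptions for illustration only (not stated in the text).
ASSUMED_SAMPLE_RATE_HZ = 16_000
ASSUMED_NOTE_SECONDS = 4


def generation_steps(num_samples: int, samples_per_step: int) -> int:
    """Number of model steps needed to cover num_samples samples."""
    return math.ceil(num_samples / samples_per_step)


num_samples = ASSUMED_SAMPLE_RATE_HZ * ASSUMED_NOTE_SECONDS

# One sample per step, as in sample-by-sample generation.
sample_by_sample = generation_steps(num_samples, 1)
# 1,024 samples per step, assuming the blocks do not overlap.
frame_by_frame = generation_steps(num_samples, FRAME_SIZE)

print(sample_by_sample, frame_by_frame)
```

Under these assumptions, the same note needs 64,000 steps when generated one sample at a time but only 63 when generated in blocks of 1,024.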

SING can generate notes from 1,000 different musical instruments, with many pitches per instrument and five different levels of force.
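One way to picture these inputs is as a small record of three integers. The following is a hypothetical sketch, not SING's real interface: the `NoteRequest` class, the zero-based indexing and the 128-value pitch range (borrowed from MIDI) are all assumptions, and only the instrument and velocity counts come from the text.

```python
from dataclasses import dataclass

NUM_INSTRUMENTS = 1000  # from the text
NUM_VELOCITIES = 5      # from the text

# Assumption: the text gives no pitch count, so the MIDI range 0-127 is used here.
ASSUMED_NUM_PITCHES = 128


@dataclass(frozen=True)
class NoteRequest:
    """Hypothetical description of one note to synthesise (zero-based indices)."""
    instrument: int
    pitch: int
    velocity: int

    def __post_init__(self) -> None:
        # Reject values outside the ranges described above.
        if not 0 <= self.instrument < NUM_INSTRUMENTS:
            raise ValueError("instrument out of range")
        if not 0 <= self.pitch < ASSUMED_NUM_PITCHES:
            raise ValueError("pitch out of range")
        if not 0 <= self.velocity < NUM_VELOCITIES:
            raise ValueError("velocity out of range")


def max_combinations() -> int:
    """Upper bound on distinct notes if every instrument covered every assumed pitch."""
    return NUM_INSTRUMENTS * ASSUMED_NUM_PITCHES * NUM_VELOCITIES


note = NoteRequest(instrument=42, pitch=60, velocity=4)
print(note, max_combinations())
```

The combination count is only an upper bound under the assumed pitch range, since a real instrument does not cover every pitch.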

SING opens up significant new opportunities to create high-quality audio in real time that is quite close to the sound of real musical instruments, particularly when compared with traditional synthesisers. The research could also be used to separate the audio of a song into its individual voices and instruments. The system's faster training and generation could make previously compute-heavy applications, such as automatic music generation, much more accessible to researchers with limited resources.